PyTorch

Tutorials

Google Colaboratory - MNIST/MLP

https://colab.research.google.com/drive/1ZuHlt_KWNEaxjzyHC6hI_cU8RMleH4tP

Google Colaboratory - MNIST/MLP via Optuna

https://colab.research.google.com/drive/1lO_0cBKVBSfJekuMUZLUTfpz8S2rIxLL

Google Colaboratory - MNIST/CNV

https://colab.research.google.com/drive/133EFmZ2Si7zJwZFCDo8P1gc-Wmt5ggCV

Google Colaboratory - MNIST/CNV as Regression

https://colab.research.google.com/drive/1FnUbW9L_Nd_1_GDReDSnEKkA2wroff2K

Google Colaboratory - MNIST/ResNet with Transfer Learning

https://colab.research.google.com/drive/1X4Wg2sUxxiK4eBYBNiI0LbY0FtL_oMrK

Google Colaboratory - MNIST/ResNet as Regression

https://colab.research.google.com/drive/1bHoW7T3w3TDn9oBHodlMP5hiTdz0fQ4V

Google Colaboratory - MNIST/ViT(Vision Transformer) with Transfer Learning

https://colab.research.google.com/drive/1dGdmpv-OnSqTKeelK4bNxHHwBjVnPDVj

Google Colaboratory - CIFAR10/CNV

https://colab.research.google.com/drive/1AyaCwUtoNOYYkLNFpReRQ4OF2e_wsRIG

Google Colaboratory - CIFAR10/ResNet with Transfer Learning

https://colab.research.google.com/drive/18z7htQBenZZrt9QD6rcvmpMUdlvt_TuE

Google Colaboratory - Custom Dataset/MLP

https://colab.research.google.com/drive/1oK84ZsB3qL0e-puriV3gF7_-dU3mhOcg

Google Colaboratory - Custom Dataset/MLP via Optuna

https://colab.research.google.com/drive/1Ld46dKvRYgd7NPuyRoql1-LiqiCtikia

Google Colaboratory - PyTorch+ONNX/SwinTransformer

https://colab.research.google.com/drive/1KHDqzMGlvm64F5GtqqFS7tIq9gn7z6sL

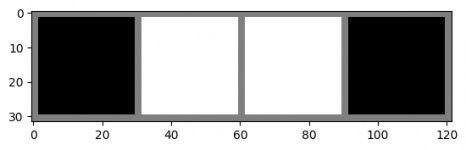

Custom Dataset

Results

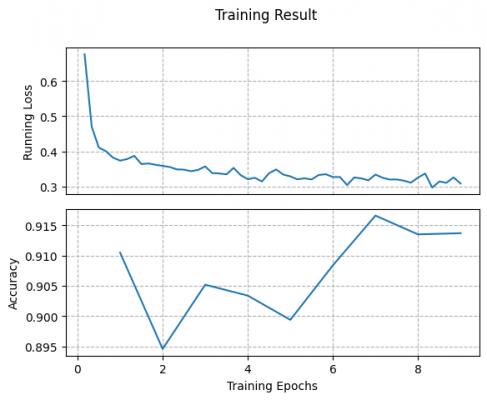

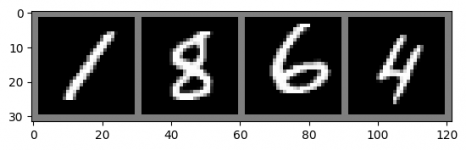

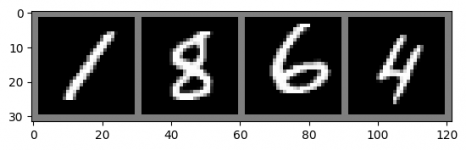

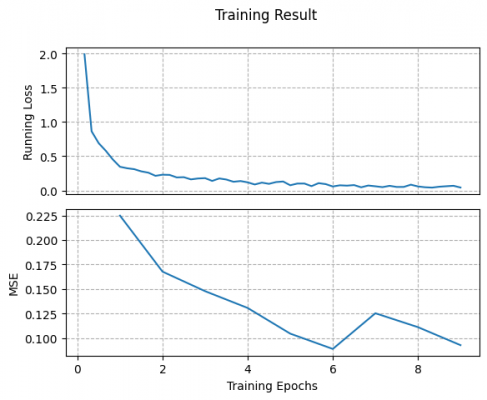

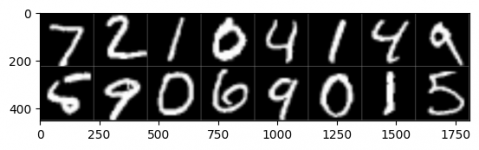

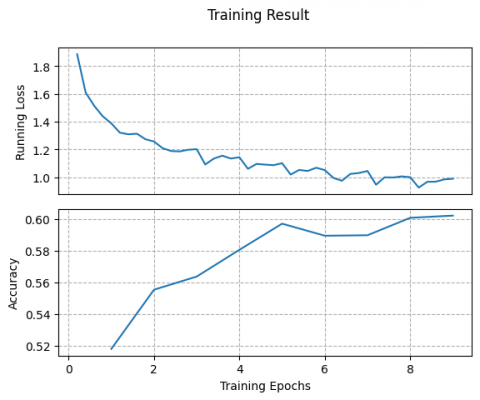

MNIST/MLP

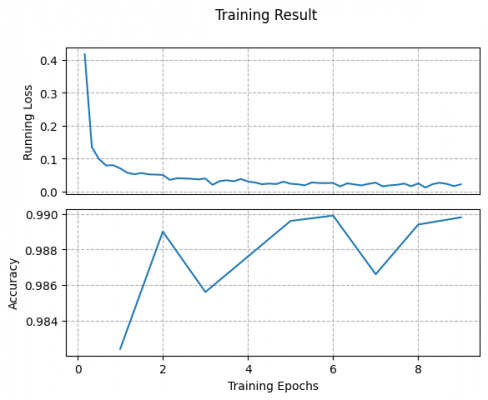

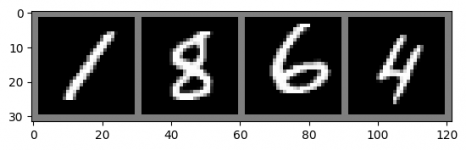

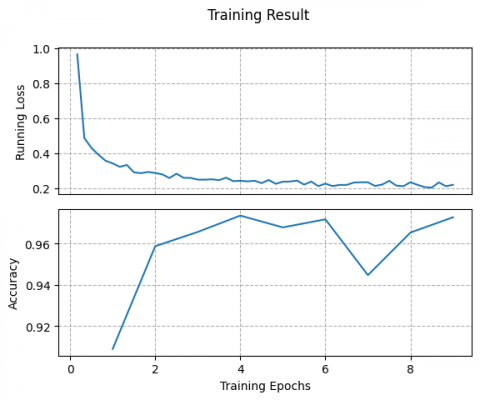

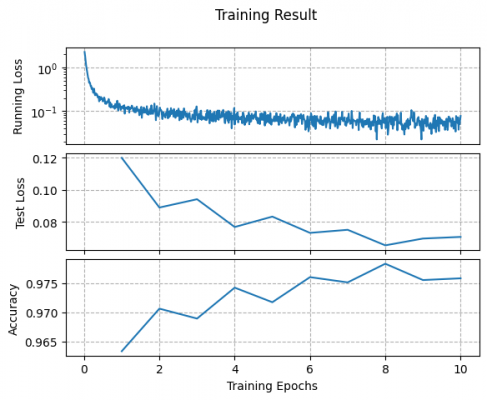

MNIST/CNV

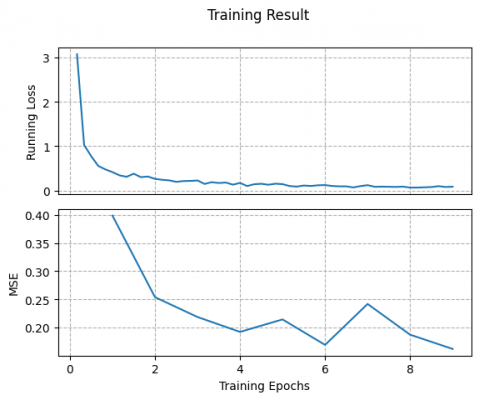

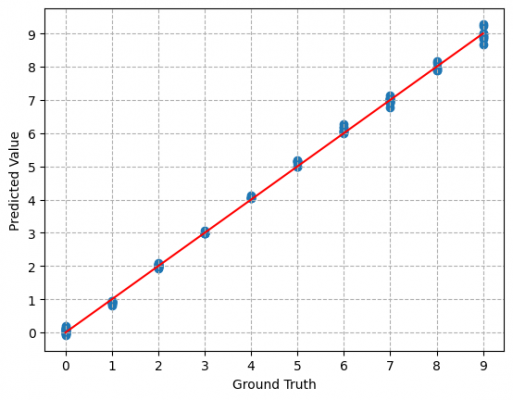

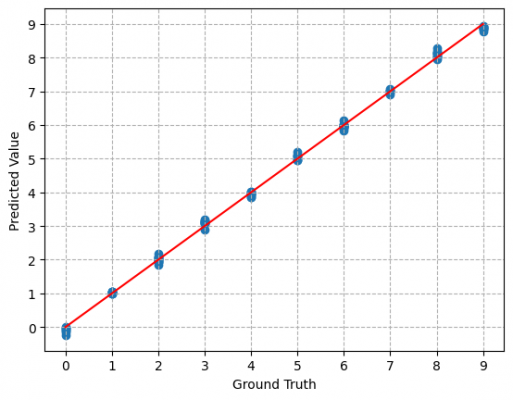

MNIST/CNV as Regression

MNIST/ResNet with Transfer Learning

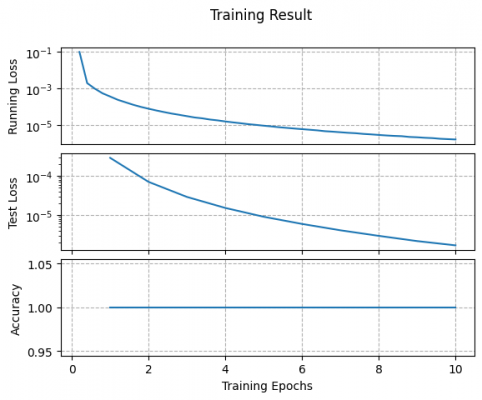

MNIST/ResNet as Regression

MNIST/ViT(Vision Transformer) with Transfer Learning

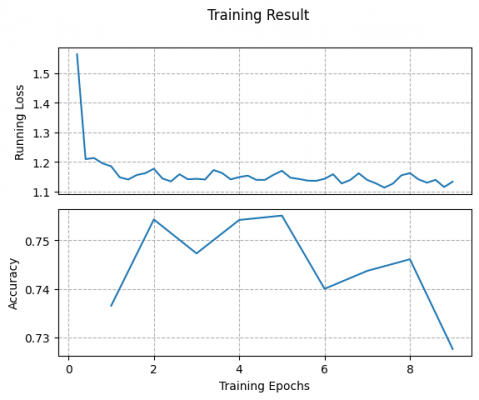

CIFAR10/CNV

CIFAR10/ResNet with Transfer Learning

Custom Dataset/MLP

PyTorch+ONNX/SwinTransformer

https://pytorch.org/docs/stable/onnx.html

366 n02480855 gorilla, Gorilla gorilla 7.715928

741 n03998194 prayer rug, prayer mat 6.302113

367 n02481823 chimpanzee, chimp, Pan troglodytes 5.2681127

371 n02486261 patas, hussar monkey, Erythrocebus patas 4.407563

365 n02480495 orangutan, orang, orangutang, Pongo pygmaeus 3.6513

Usage

Save/Load

torch.save(model.state_dict(), 'model.pth')

model = NetworkClass(*args, **kwargs)

model.load_state_dict(torch.load('model.pth'))

Troubleshooting - No CUDA Device

## RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False.

## If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu') to map your storages to the CPU.

model.load_state_dict(torch.load('model.pth', map_location=torch.device('cpu')))
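The save/load pattern above can be checked with a small round trip. This is a minimal sketch, assuming a hypothetical one-layer `TinyNet` in place of `NetworkClass` and a temporary directory in place of the working directory. Passing `map_location` should be harmless on a machine that does have CUDA, so it may be a reasonable default when the checkpoint could be opened on a CPU-only host.

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for NetworkClass."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(x)


model = TinyNet()

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, 'model.pth')
    # Save only the parameters (state_dict), not the whole module object
    torch.save(model.state_dict(), path)

    # Rebuild the architecture first, then load the parameters onto the CPU
    restored = TinyNet()
    state = torch.load(path, map_location=torch.device('cpu'))
    restored.load_state_dict(state)

restored.eval()
x = torch.randn(3, 4)
# The restored model should reproduce the original outputs
same_output = torch.allclose(model(x), restored(x))
```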

Transforms

https://pytorch.org/vision/stable/generated/torchvision.transforms.Compose.html

https://pytorch.org/vision/stable/generated/torchvision.transforms.ToTensor.html

https://pystyle.info/pytorch-list-of-transforms/

https://blog.kikagaku.co.jp/pytorch-torchvision

transform = transforms.Compose([transforms.ToTensor()])

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

transform = transforms.Compose([transforms.CenterCrop(224), transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

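The order of the transforms matters: transforms.ToTensor first converts an image array in the range [0, 255] (numpy.uint8) to a float tensor in the range [0, 1], and transforms.Normalize with mean 0.5 and std 0.5 then maps [0, 1] to [-1, 1], so transforms.Normalize must come after transforms.ToTensor.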
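The arithmetic behind this ordering can be sketched without torchvision. The helper names `to_unit_range` and `normalize` are hypothetical and only mimic the per-pixel scaling; the real transforms.ToTensor also rearranges the image from height x width x channel to channel x height x width.

```python
def to_unit_range(pixel):
    """Mimic the scaling done by transforms.ToTensor: [0, 255] -> [0, 1]."""
    return pixel / 255.0


def normalize(value, mean=0.5, std=0.5):
    """Mimic transforms.Normalize for one channel: (value - mean) / std."""
    return (value - mean) / std


pixels = [0, 51, 255]

# Scale first, then normalize: results stay within [-1, 1]
correct_order = [normalize(to_unit_range(p)) for p in pixels]

# Normalizing the raw [0, 255] values directly gives a very different range
wrong_order = [normalize(float(p)) for p in pixels]
```

With mean 0.5 and std 0.5, the scaled endpoints 0 and 1 map to -1 and 1, while the unscaled value 255 would map to 509.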

Information - Automatic Image Augmentation

https://sebastianraschka.com/blog/2023/data-augmentation-pytorch.html

transform = transforms.Compose([transforms.AutoAugment(), transforms.ToTensor()])

transform = transforms.Compose([transforms.RandAugment(), transforms.ToTensor()])

transform = transforms.Compose([transforms.AugMix(), transforms.ToTensor()])

transform = transforms.Compose([transforms.TrivialAugmentWide(), transforms.ToTensor()])

Network Design

See Deep Learning for details

Information - Softmax Output

https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html

https://qiita.com/y629/items/1369ab6e56b93d39e043

https://qiita.com/ground0state/items/8933f9ef54d6cd005a69

https://qiita.com/takurooo/items/e356dfdeec768d8f7146

## [IMPORTANT] Softmax should not be used at the output layer if using CrossEntropyLoss !!!

class NET(nn.Module) :

    def __init__(self) :

        super(NET, self).__init__()

        self.fc = nn.Linear(input, output)

    def forward(self, x) :

        x = self.fc(x)

        return x

criterion = nn.CrossEntropyLoss()

## CrossEntropyLoss is equivalent to LogSoftmax followed by NLLLoss; with the explicit form below, softmax probabilities can be checked by exponentiating the network output

class NET(nn.Module) :

    def __init__(self) :

        super(NET, self).__init__()

        self.fc = nn.Linear(input, output)

        self.logsoftmax = nn.LogSoftmax(dim=1)

    def forward(self, x) :

        x = self.logsoftmax(self.fc(x))

        return x

criterion = nn.NLLLoss()
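The equivalence of the two variants can be verified numerically. This is a minimal sketch on random logits; the batch size of 4 and the 10 classes are arbitrary assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw outputs of the last Linear layer
targets = torch.tensor([1, 0, 9, 3])  # class indices (dtype torch.long)

# Variant 1: raw logits into CrossEntropyLoss
loss_ce = nn.CrossEntropyLoss()(logits, targets)

# Variant 2: LogSoftmax output into NLLLoss
log_probs = nn.LogSoftmax(dim=1)(logits)
loss_nll = nn.NLLLoss()(log_probs, targets)

# Probabilities are recovered by exponentiating the log-probabilities
probs = log_probs.exp()
```

Both losses should agree up to floating-point error, and each row of `probs` should sum to 1. Adding a Softmax layer in front of CrossEntropyLoss would apply the normalization twice, which is why it is discouraged.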

Information - Examples of Node Calculations of Convolutional Network

## FINN CNV inspired by BinaryNet and VGG-16 for (3, 32, 32) Input Images

self.conv1 = nn.Conv2d(3, 64, 3) ## 003x(032x032) -> 064x(030x030)

self.conv2 = nn.Conv2d(64, 64, 3) ## 064x(030x030) -> 064x(028x028)

self.pool1 = nn.MaxPool2d(2, 2) ## 064x(028x028) -> 064x(014x014)

self.conv3 = nn.Conv2d(64, 128, 3) ## 064x(014x014) -> 128x(012x012)

self.conv4 = nn.Conv2d(128, 128, 3) ## 128x(012x012) -> 128x(010x010)

self.pool2 = nn.MaxPool2d(2, 2) ## 128x(010x010) -> 128x(005x005)

self.conv5 = nn.Conv2d(128, 256, 3) ## 128x(005x005) -> 256x(003x003)

self.conv6 = nn.Conv2d(256, 256, 3) ## 256x(003x003) -> 256x(001x001)

self.fc1 = nn.Linear((256 * (1 * 1)), [next])

## Simple Convolutions for (3, 32, 32) Input Images

self.conv1 = nn.Conv2d(3, 6, 5) ## 003x(032x032) -> 006x(028x028)

self.pool1 = nn.MaxPool2d(2, 2) ## 006x(028x028) -> 006x(014x014)

self.conv2 = nn.Conv2d(6, 16, 5) ## 006x(014x014) -> 016x(010x010)

self.pool2 = nn.MaxPool2d(2, 2) ## 016x(010x010) -> 016x(005x005)

self.fc1 = nn.Linear((16 * (5 * 5)), [next])
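The spatial sizes in the comments above follow from the usual output-size rule, out = floor((in + 2 x padding - kernel) / stride) + 1, for layers without dilation. A minimal sketch that reproduces both traces; the helper names `conv_out` and `trace` are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of Conv2d or MaxPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Apply (kernel, stride) pairs in order and collect the spatial sizes."""
    sizes = [size]
    for kernel, stride in layers:
        size = conv_out(size, kernel, stride)
        sizes.append(size)
    return sizes


conv3 = (3, 1)  # nn.Conv2d(..., 3)
conv5 = (5, 1)  # nn.Conv2d(..., 5)
pool2 = (2, 2)  # nn.MaxPool2d(2, 2)

cnv_sizes = trace(32, [conv3, conv3, pool2, conv3, conv3, pool2, conv3, conv3])
simple_sizes = trace(32, [conv5, pool2, conv5, pool2])
print(cnv_sizes)     # [32, 30, 28, 14, 12, 10, 5, 3, 1]
print(simple_sizes)  # [32, 28, 14, 10, 5]
```

The last entry, multiplied by itself and by the number of output channels, gives the input size of the first Linear layer: 256 x 1 x 1 and 16 x 5 x 5 respectively.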

https://qiita.com/hiromasat/items/43e20c8da6d21f2578dc

Number of Parameters = ( (Input Channels x Kernel Height x Kernel Width) + 1 (Bias) ) x Output Channels
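As a worked instance of this formula, the sketch below counts the parameters of the first convolution in each of the two networks above. It assumes square kernels and groups=1; the function name `conv2d_params` is hypothetical.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Learnable parameters of a square-kernel Conv2d (groups=1)."""
    weights_per_filter = in_channels * kernel_size * kernel_size
    return (weights_per_filter + (1 if bias else 0)) * out_channels


first_cnv = conv2d_params(3, 64, 3)    # nn.Conv2d(3, 64, 3)
first_simple = conv2d_params(3, 6, 5)  # nn.Conv2d(3, 6, 5)
print(first_cnv, first_simple)  # 1792 456
```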

Custom Loss Function

class RMSELoss(nn.Module) :

    def __init__(self) :

        super(RMSELoss, self).__init__()

    def forward(self, outputs, targets) :

        loss = torch.sqrt(torch.mean(torch.abs(outputs - targets) ** 2.0))

        return loss

criterion = RMSELoss()
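A quick sanity check for this loss is to compare it with the square root of the built-in nn.MSELoss. This is a minimal sketch with made-up values; the `torch.abs` call is omitted here because squaring already removes the sign.

```python
import torch
import torch.nn as nn


class RMSELoss(nn.Module):
    def forward(self, outputs, targets):
        # Root of the mean squared difference
        return torch.sqrt(torch.mean((outputs - targets) ** 2))


outputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([1.0, 2.0, 6.0])

rmse = RMSELoss()(outputs, targets)                     # sqrt((0 + 0 + 9) / 3)
reference = torch.sqrt(nn.MSELoss()(outputs, targets))  # same value via MSELoss
```

For these values the squared errors are 0, 0, and 9, so both results should be close to the square root of 3.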

Troubleshooting - Target Size Difference

https://qiita.com/kimisyo/items/579d380b991995976f79

https://stackoverflow.com/questions/61598771/pytorch-squeeze-and-unsqueeze

## UserWarning: Using a target size (torch.Size([16])) that is different to the input size (torch.Size([16, 1000])).

## This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.

## ...

## RuntimeError: The size of tensor a (1000) must match the size of tensor b (16) at non-singleton dimension 1

## The outputs and labels tensors must have the same shape; squeeze or unsqueeze the outputs as needed:

loss = criterion(outputs, labels)

loss = criterion(outputs.squeeze(-1), labels)

loss = criterion(outputs.unsqueeze(-1), labels)
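The effect of `squeeze` and `unsqueeze` on the shapes can be seen in a small regression-style case. This is a minimal sketch, assuming a model with a single output unit and nn.MSELoss; here `unsqueeze` is applied to the labels rather than the outputs, since either tensor can be adapted as long as both end up with the same shape.

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()
outputs = torch.zeros(16, 1)  # e.g. a regression head with one output unit
labels = torch.ones(16)       # one scalar target per sample

# Option 1: drop the trailing dimension of the outputs -> shape (16,)
squeezed = outputs.squeeze(-1)
loss_a = criterion(squeezed, labels)

# Option 2: add a trailing dimension to the labels -> shape (16, 1)
expanded = labels.unsqueeze(-1)
loss_b = criterion(outputs, expanded)
```

Calling `criterion(outputs, labels)` directly would broadcast (16, 1) against (16,) into a (16, 16) comparison, which is the situation the warning describes.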

Convert Tensor/NDArray

https://tzmi.hatenablog.com/entry/2020/02/16/170928

## NumPy NDArray to PyTorch Tensor

x = torch.from_numpy(x.astype(np.float32)).clone()

## PyTorch Tensor to NumPy NDArray

x = x.to('cpu').detach().numpy().copy()
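Both conversions can be exercised in a short round trip. This is a minimal sketch with a made-up 2 x 3 array; it also shows why `detach()` is needed once a tensor takes part in autograd, and that `clone()` and `copy()` keep the two objects from sharing memory.

```python
import numpy as np
import torch

array = np.arange(6, dtype=np.float64).reshape(2, 3)

# NumPy -> PyTorch: cast to float32, then clone so no NumPy buffer is shared
tensor = torch.from_numpy(array.astype(np.float32)).clone()

# PyTorch -> NumPy: move to CPU, drop the autograd graph, copy the buffer
tensor.requires_grad_(True)
back = (tensor * 2).to('cpu').detach().numpy().copy()

# In-place edits on one object do not leak into the others
array[0, 0] = 100.0
back[0, 1] = -1.0
```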

Troubleshooting - Long-Type Expected in CrossEntropyLoss

## RuntimeError: Expected object of scalar type Long but got scalar type Float

loss = criterion(output, target.long())

loss = criterion(output, target.type(torch.long))
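A minimal sketch of the fix, assuming class labels that were loaded as floats by mistake (for example from a CSV file). The shapes and values are made up for illustration.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
output = torch.randn(4, 3)                   # logits for 3 classes
target = torch.tensor([0.0, 2.0, 1.0, 2.0])  # labels stored as float

# Cast the class indices to torch.long (int64) before computing the loss
target_long = target.long()
loss = criterion(output, target_long)
```

Casting once when the dataset is built, rather than at every loss call, may be the tidier option when the labels are always class indices.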

Training

inputs, labels = data[0].to(device), data[1].to(device)

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()
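The step above usually sits inside a loop over epochs and mini-batches. The following is a minimal sketch on synthetic data; the dataset, the single-layer `net`, the learning rate, and the epoch count are all assumptions chosen only to keep the example small.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Hypothetical toy data: 64 samples, 8 features, class = sign of first feature
features = torch.randn(64, 8)
classes = (features[:, 0] > 0).long()
trainloader = DataLoader(TensorDataset(features, classes), batch_size=16, shuffle=True)

net = nn.Linear(8, 2).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.1)

epoch_losses = []
for epoch in range(20):
    running_loss = 0.0
    for data in trainloader:
        inputs, labels = data[0].to(device), data[1].to(device)
        optimizer.zero_grad()              # clear gradients from the previous step
        outputs = net(inputs)              # forward pass
        loss = criterion(outputs, labels)  # compare logits with class indices
        loss.backward()                    # backpropagate
        optimizer.step()                   # update the parameters
        running_loss += loss.item()
    epoch_losses.append(running_loss / len(trainloader))
```

Tracking the mean loss per epoch, as in `epoch_losses`, gives a simple signal that the loop is wired correctly: the value should fall over the first few epochs.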

Troubleshooting - Runtime Error

https://discuss.pytorch.org/t/runtimeerror-could-not-create-a-primitive/117519

## RuntimeError: could not create a primitive descriptor iterator

The host machine needs more system memory

Another workaround is preparing additional swap memory: see Swap for instructions

PyTorch Hub

https://pytorch.org/hub/

https://pytorch.org/vision/

https://pytorch.org/vision/stable/models.html

net = torch.hub.load('pytorch/vision', 'resnet18', weights='DEFAULT')

Troubleshooting - Import Error

https://github.com/pytorch/vision/issues/7397

https://github.com/pytorch/vision/issues/6431

## File ~/.cache/torch/hub/pytorch_vision_main/hubconf.py:74

## 72 from torchvision.models.swin_transformer import swin_b, swin_s, swin_t, swin_v2_b, swin_v2_s, swin_v2_t

## 73 from torchvision.models.vgg import vgg11, vgg11_bn, vgg13, vgg13_bn, vgg16, vgg16_bn, vgg19, vgg19_bn

## ---> 74 from torchvision.models.video import (

## 75 mc3_18,

## 76 mvit_v1_b,

## 77 mvit_v2_s,

## 78 r2plus1d_18,

## 79 r3d_18,

## 80 s3d,

## 81 swin3d_b,

## 82 swin3d_s,

## 83 swin3d_t,

## 84 )

## 85 from torchvision.models.vision_transformer import vit_b_16, vit_b_32, vit_h_14, vit_l_16, vit_l_32

##

## ImportError: cannot import name 'swin3d_b' from 'torchvision.models.video' (/opt/.../python3.8/site-packages/torchvision/models/video/__init__.py)

## Specify an old version number:

net = torch.hub.load('pytorch/vision:v0.13.0', 'resnet18', weights='DEFAULT')

ONNX Export

https://learn.microsoft.com/windows/ai/windows-ml/tutorials/pytorch-analysis-convert-model

https://pytorch.org/tutorials/advanced/super_resolution_with_onnxruntime.html

## Model Definition

model = NetworkClass(*args, **kwargs)

## [IMPORTANT] Set the Model to Inference Mode before Export !!!

model.eval()

## Preparing Dummy Input Tensor

dummy_input = torch.randn(1, 1, 28, 28, requires_grad=True)

#dummy_input = torch.randn(1, 3, 32, 32, requires_grad=True)

## ONNX Export

torch.onnx.export(model, dummy_input, 'model.onnx', input_names=['input'], output_names=['output'], verbose=True)

Troubleshooting - Bad Node Spec

https://discuss.pytorch.org/t/onnx-custom-operator-runtime-error/

## Traceback (most recent call last):

## File "<stdin>", line 1, in <module>

## File "/opt/conda/lib/python3.8/site-packages/torch/onnx/__init__.py", line 225, in export

## return utils.export(model, args, f, export_params, verbose, training,

## File "/opt/conda/lib/python3.8/site-packages/torch/onnx/utils.py", line 85, in export

## _export(model, args, f, export_params, verbose, training, input_names, output_names,

## File "/opt/conda/lib/python3.8/site-packages/torch/onnx/utils.py", line 662, in _export

## _check_onnx_proto(proto)

## RuntimeError: No Op registered for GreaterOrEqual with domain_version of 9

##

## ==> Context: Bad node spec: input: "43" input: "44" output: "45" name: "GreaterOrEqual_19" op_type: "GreaterOrEqual"

## Add operator_export_type to torch.onnx.export:

torch.onnx.export(model, dummy_input, 'model.onnx', input_names=['input'], output_names=['output'], verbose=True, operator_export_type=torch.onnx.OperatorExportTypes.ONNX_ATEN_FALLBACK)

References

PyTorch

https://pytorch.org/

https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html

https://pytorch.org/tutorials/beginner/basics/data_tutorial.html

https://pytorch.org/hub/pytorch_vision_resnet/

Introduction to PyTorch

https://ex-ture.com/blog/2021/01/01/pytorch-basic/

https://ex-ture.com/blog/2021/01/02/googlecolab-pytorch/

https://ex-ture.com/blog/2021/01/04/pytorch-nn/

https://ex-ture.com/blog/2021/01/08/freehand-accuracy/

https://ex-ture.com/blog/2021/01/11/pytorch-cnn/

https://ex-ture.com/blog/2021/01/11/cnn-cifar10/

https://ex-ture.com/blog/2021/01/12/pytorch-rnn/

https://qiita.com/mathlive/items/8e1f9a8467fff8dfd03c

https://qiita.com/takawamoto/items/42ff569be496621fc016

https://qiita.com/hcchiu1202/items/6d32d679fc97443901a1

https://qiita.com/hcchiu1202/items/7ac09c4c8a7dd4701c28

Acknowledgments

Daiphys is a professional services company in research and development of leading-edge technologies in science and engineering.


Daiphys Technologies LLC - https://www.daiphys.com/