BNN-PYNQ

Getting Started

Publications

See FINN for the publications

Model Structures

https://www.hackster.io/adam-taylor/training-implementing-a-bnn-using-pynq-1210b9

- LFC: 4 fully connected layers for 28 × 28 binary (black/white) input

- CNV: 6 convolutional + 2 max-pooling + 3 fully connected layers for 32 × 32 × 3 (RGB, 8 bits per channel) input

- The output activation is given by the sign function: $\mathrm{sign}(x) = +1$ if $x \geq 0$, $-1$ if $x < 0$
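To make the sign activation concrete, here is a minimal NumPy sketch (not code from BNN-PYNQ or FINN) of one binarized fully connected layer. When weights and activations are restricted to {-1, +1}, a dot product reduces to an XNOR followed by a popcount, which is the kind of arithmetic that maps cheaply onto FPGA logic. Encoding -1 as bit 0 and +1 as bit 1, and all function names, are illustrative assumptions.

```python
import numpy as np

def sign(x):
    """Sign activation as defined above: +1 if x >= 0, -1 if x < 0.

    np.sign is not used because it maps 0 to 0.
    """
    return np.where(x >= 0, 1, -1).astype(np.int32)

def to_bits(v):
    """Encode a {-1, +1} vector as {0, 1} bits (-1 -> 0, +1 -> 1)."""
    return (v > 0).astype(np.uint8)

def xnor_popcount_dot(a_bits, w_bits):
    """Dot product of two {-1, +1} vectors given as {0, 1} bits.

    XNOR is 1 where the bits agree. Each agreement contributes +1 and each
    disagreement -1, so dot = matches - (n - matches) = 2 * matches - n.
    """
    n = a_bits.size
    matches = int(np.count_nonzero(~(a_bits ^ w_bits) & 1))
    return 2 * matches - n

def binary_dense(a, W):
    """One binarized fully connected layer: sign(W @ a) computed via XNOR-popcount."""
    a_bits = to_bits(a)
    pre = np.array([xnor_popcount_dot(a_bits, to_bits(w)) for w in W])
    return sign(pre)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = sign(rng.standard_normal(64))          # binary input activations
    W = sign(rng.standard_normal((10, 64)))    # binary weights, 10 output neurons
    # The XNOR-popcount path agrees with an ordinary integer dot product
    assert np.array_equal(binary_dense(a, W), sign(W @ a))
    print(binary_dense(a, W))
```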

Board Setup

See PYNQ-Z1 for the installation

Installation on Target Board

https://github.com/Xilinx/BNN-PYNQ

```shell
sudo apt-get update
sudo apt-get upgrade

#sudo pip3 install git+https://github.com/Xilinx/BNN-PYNQ.git
sudo pip3 install git+https://github.com/Xilinx/BNN-PYNQ.git --no-build-isolation
```

Sample Execution on Target Board (CNV-QNN_CIFAR10)

Run bnn/CNV-QNN_Cifar10.ipynb in Jupyter Notebook.

Sample Image

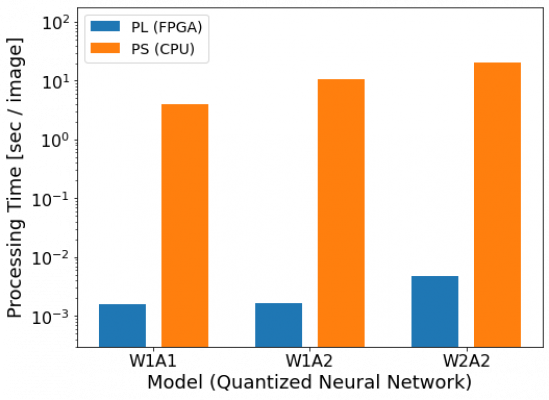

Processing Time: PL-FPGA (100 MHz) vs PS-CPU (650 MHz)

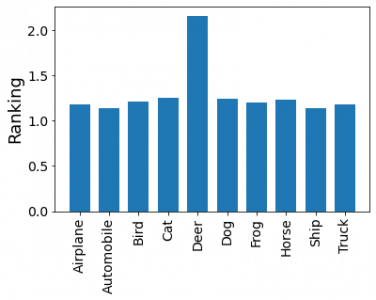

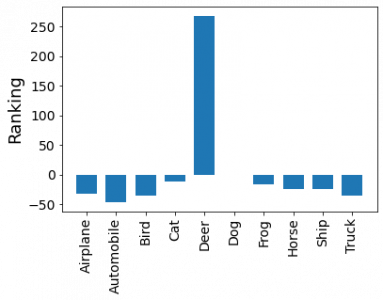

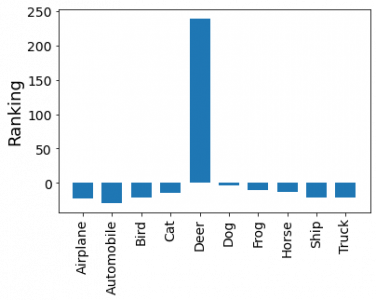

Class Rankings: CNVW1A1 / CNVW1A2 / CNVW2A2 (W = weight bits, A = activation bits)
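The rankings order the ten CIFAR-10 classes by the network's output score. A minimal sketch of that step, assuming you already have one score per class as a plain list or array (how to obtain it from the notebook is not shown here). The class order is the standard CIFAR-10 label order; `rank_classes` is a hypothetical helper, not part of the `bnn` package.

```python
import numpy as np

# Standard CIFAR-10 label order (index 0..9)
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]

def rank_classes(scores, class_names=CIFAR10_CLASSES):
    """Return (class_name, score) pairs sorted from highest to lowest score."""
    scores = np.asarray(scores)
    if scores.shape != (len(class_names),):
        raise ValueError("expected one score per class")
    # Descending order; the stable sort keeps tied classes in label order
    order = np.argsort(-scores, kind="stable")
    return [(class_names[i], scores[i].item()) for i in order]

if __name__ == "__main__":
    # Made-up scores, for illustration only
    example = [3, 1, 0, 7, 2, 5, 0, 1, 4, 2]
    for rank, (name, s) in enumerate(rank_classes(example), start=1):
        print(rank, name, s)
```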

Training on Host Machine (CNV-QNN_CIFAR10)

Tips

- Training does not have to run on the FPGA board; it can run on an AWS/GCP instance with a GPU

- Install CUDA 10.2 + cuDNN 7.6.5 on Ubuntu 18.04 for faster training with an NVIDIA GPU

- The latest CUDA releases are incompatible with the training scripts

- Install Anaconda for easier installation

- CPU runtime: 1000 sec/epoch $\times$ 500 epochs ≈ 140 hours

- GPU runtime: 30 sec/epoch $\times$ 500 epochs ≈ 4 hours (Tesla T4)

- Python 2.7 is required

- The original installation steps no longer work with the latest libraries (as of 2023)
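The runtime figures above are seconds per epoch multiplied by the number of epochs. A small helper makes the arithmetic explicit, for example when sizing a cloud GPU booking; the per-epoch times are the rough measurements quoted in the tips, not guarantees.

```python
def total_hours(sec_per_epoch, epochs):
    """Wall-clock training time in hours, assuming a constant cost per epoch."""
    return sec_per_epoch * epochs / 3600.0

if __name__ == "__main__":
    # Rough per-epoch timings quoted in the tips above (CNV on CIFAR-10, 500 epochs)
    for label, sec in [("CPU", 1000), ("GPU, Tesla T4", 30)]:
        print("%-14s %6.1f hours" % (label, total_hours(sec, 500)))
```

For the CPU case, 1000 × 500 = 500,000 s ≈ 138.9 hours, which the tip rounds to 140 hours. For the GPU case, 30 × 500 = 15,000 s ≈ 4.2 hours.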

Install (Original/Failed)

https://github.com/Xilinx/BNN-PYNQ/tree/master/bnn/src/training

```shell
## Dependencies
sudo apt-get install -y git python-dev libopenblas-dev liblapack-dev gfortran
sudo apt-get install g++

## Pip
#wget https://bootstrap.pypa.io/get-pip.py && python get-pip.py --user
wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py --user

## PATH
export PATH="$HOME/.local/bin${PATH:+:${PATH}}"

## Theano/Lasagne
pip uninstall pyyaml
pip install pyyaml
pip install --user pathlib
pip install --user git+https://github.com/Theano/Theano.git@rel-0.9.0beta1
pip install --user https://github.com/Lasagne/Lasagne/archive/master.zip

cat << EOF > ~/.theanorc
[global]
floatX = float32
device = gpu
openmp = True
openmp_elemwise_minsize = 200000
[nvcc]
fastmath = True
[blas]
ldflags = -lopenblas
[warn]
round = False
EOF

## PyLearn2
pip install --user numpy==1.11.0
git clone https://github.com/lisa-lab/pylearn2
cd pylearn2
python setup.py develop --user
cd

## Data Download
export PYLEARN2_DATA_PATH=~/.pylearn2
mkdir -p ~/.pylearn2
cd pylearn2/pylearn2/scripts/datasets
python download_mnist.py
./download_cifar10.sh
cd
```

With the latest CUDA 11.8 + cuDNN 8.9.1, Theano raises errors at this point.

The following section describes a workaround that works as of 2023.

Install (Successful)

https://qiita.com/harmegiddo/items/6d7490214b693150cef9

```shell
## Anaconda
conda create --name pynq python=2.7
conda activate pynq
conda install tensorflow=2.1.0=gpu_py27h9cdf9a9_0
conda install pygpu

pip install cython
pip install keras==2.3.1
pip install theano==1.0.5
pip install https://github.com/Lasagne/Lasagne/archive/master.zip

cat << EOF > ~/.theanorc
[global]
floatX = float32
device = cuda
openmp = True
openmp_elemwise_minsize = 200000
[nvcc]
fastmath = True
[blas]
ldflags = -lopenblas
[warn]
round = False
EOF
```
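One difference from the failed recipe is the `device` line: Theano 1.0 removed the old CUDA backend that `device = gpu` selected, and the libgpuarray backend (hence `pygpu`) is selected with `device = cuda`. The sketch below flags a leftover `gpu` setting and a non-float32 `floatX` before a multi-hour run. It uses Python 3's standard `configparser` (under Python 2.7 the module is named `ConfigParser`), and `check_theanorc` is a hypothetical helper with heuristic checks, not Theano's own config parser.

```python
import configparser
import os

def check_theanorc(text):
    """Return a list of warnings for a .theanorc given as a string (heuristic checks only)."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    warnings = []
    # Theano's defaults are device=cpu and floatX=float64
    device = cfg.get("global", "device", fallback="cpu")
    if device.startswith("gpu"):
        # The old CUDA backend selected by device=gpu* no longer exists in Theano 1.0
        warnings.append("device = %s: use device = cuda with Theano 1.0" % device)
    floatx = cfg.get("global", "floatX", fallback="float64")
    if floatx != "float32":
        warnings.append("floatX = %s: float32 is the usual choice for GPU training" % floatx)
    return warnings

if __name__ == "__main__":
    path = os.path.expanduser("~/.theanorc")
    if os.path.exists(path):
        with open(path) as f:
            for w in check_theanorc(f.read()):
                print("WARNING:", w)
    else:
        print("no ~/.theanorc found")
```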

Training

```shell
git clone https://github.com/Xilinx/BNN-PYNQ.git
export XILINX_BNN_ROOT="$HOME/BNN-PYNQ/bnn/src/"
cd ./BNN-PYNQ/bnn/src/training/
```

The original cifar10.py was modified to avoid pylearn2 and to download the data with Keras instead:

bnn-pynq_cifar10_mod.py.gz
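The modified script itself is in the attachment and is not reproduced here. As a rough illustration of what replacing pylearn2 with Keras involves, the sketch below converts arrays in the layout returned by `keras.datasets.cifar10.load_data()` (uint8 images of shape (N, 32, 32, 3), labels of shape (N, 1)) into channels-first float32 inputs scaled to [-1, 1] and ±1 one-hot targets, then keeps the first 45000 samples for training, matching train_set_size in the log. The scaling, target encoding, split, and helper names are assumptions, not the contents of bnn-pynq_cifar10_mod.py.

```python
import numpy as np

def preprocess_cifar10(x, y, num_classes=10):
    """Convert Keras-style CIFAR-10 arrays.

    x: uint8 array, shape (N, 32, 32, 3), values 0..255 (channels last)
    y: integer labels, shape (N, 1) or (N,)
    Returns X float32 (N, 3, 32, 32) in [-1, 1] and Y float32 (N, 10) in {-1, +1}.
    """
    X = x.astype(np.float32) / 127.5 - 1.0        # 0..255 -> -1..1 (same as 2*x/255 - 1)
    X = X.transpose(0, 3, 1, 2)                   # NHWC -> NCHW (channels first)
    labels = np.asarray(y).reshape(-1)
    Y = np.eye(num_classes, dtype=np.float32)[labels]
    Y = 2.0 * Y - 1.0                             # one-hot {0, 1} -> {-1, +1} for a hinge-style loss
    return X, Y

def split_train_valid(X, Y, train_set_size=45000):
    """First train_set_size samples for training, the rest for validation."""
    return (X[:train_set_size], Y[:train_set_size]), (X[train_set_size:], Y[train_set_size:])

if __name__ == "__main__":
    # With Keras installed, the real data would come from:
    #   from keras.datasets import cifar10
    #   (x_train, y_train), (x_test, y_test) = cifar10.load_data()
    # Random stand-in data keeps this example self-contained.
    rng = np.random.default_rng(0)
    x_fake = rng.integers(0, 256, size=(8, 32, 32, 3)).astype(np.uint8)
    y_fake = rng.integers(0, 10, size=(8, 1))
    X, Y = preprocess_cifar10(x_fake, y_fake)
    (Xt, Yt), (Xv, Yv) = split_train_valid(X, Y, train_set_size=6)
    print(X.shape, Y.shape, Xt.shape, Xv.shape)
```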

```shell
python cifar10_mod.py
#python cifar10_mod.py -wb 1 -ab 2
#python cifar10_mod.py -wb 2 -ab 2
```

```text
Using cuDNN version 7605 on context None
Mapped name None to device cuda: Tesla T4 (0000:00:04.0)
Using TensorFlow backend.
activation_bits = 1
weight_bits = 1
batch_size = 50
alpha = 0.1
epsilon = 0.0001
W_LR_scale = Glorot
num_epochs = 500
LR_start = 0.001
LR_fin = 3e-07
LR_decay = 0.983907435305
save_path = cifar10-1w-1a.npz
train_set_size = 45000
shuffle_parts = 1
Loading CIFAR-10 dataset...
...
Training...
Epoch 1 of 500 took 31.9853491783s
LR: 0.001
training loss: 0.715714348786407
validation loss: 0.3401033526659012
validation error rate: 57.180000364780426%
best epoch: 1
best validation error rate: 57.180000364780426%
test loss: 0.33863225907087324
test error rate: 56.81000013649463%
```
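The LR_decay values printed in the logs match an exponential schedule that takes the learning rate from LR_start to LR_fin over num_epochs, i.e. LR_decay = (LR_fin / LR_start)^(1 / num_epochs). If the learning rate is multiplied by LR_decay after every epoch (an assumption consistent with "LR: 0.001" in epoch 1), it reaches LR_fin after the last epoch. The sketch below is a check of that arithmetic for this run and the MNIST run further down, not code taken from the training scripts.

```python
def lr_decay(lr_start, lr_fin, num_epochs):
    """Per-epoch factor that shrinks lr_start to lr_fin over num_epochs epochs."""
    return (lr_fin / lr_start) ** (1.0 / num_epochs)

def lr_at_epoch(lr_start, decay, epoch):
    """Learning rate in 1-based `epoch`, assuming LR *= decay after every epoch."""
    return lr_start * decay ** (epoch - 1)

if __name__ == "__main__":
    cifar = lr_decay(0.001, 3e-07, 500)    # CIFAR-10 log reports LR_decay = 0.983907435305
    mnist = lr_decay(0.003, 3e-07, 1000)   # MNIST log reports LR_decay = 0.990831944893
    print("%.12f %.12f" % (cifar, mnist))
    print("LR in the last epoch (500): %.3g" % lr_at_epoch(0.001, cifar, 500))
```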

Weight

```shell
python cifar10-gen-weights-W1A1.py
#python cifar10-gen-weights-W1A2.py
#python cifar10-gen-weights-W2A2.py
```
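The weight-generation scripts start from the .npz archive written during training (save_path in the log, e.g. cifar10-1w-1a.npz). Before converting, it can help to list what the archive holds. This minimal NumPy sketch only assumes a standard .npz file; the array names depend on how the training script saved it (arrays passed positionally to `np.savez` are named arr_0, arr_1, ...). `describe_npz` is a hypothetical helper.

```python
import os
import sys
import numpy as np

def describe_npz(path):
    """Return (name, shape, dtype) for every array stored in an .npz archive."""
    with np.load(path) as data:
        return [(name, data[name].shape, str(data[name].dtype)) for name in data.files]

if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "cifar10-1w-1a.npz"
    if os.path.exists(path):
        for name, shape, dtype in describe_npz(path):
            print(name, shape, dtype)
    else:
        print("file not found:", path)
```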

```shell
ls cnvW1A1
```

```text
0-0-thres.bin    0-9-thres.bin    1-21-thres.bin    1-7-thres.bin    2-6-thres.bin    3-5-thres.bin
0-0-weights.bin  0-9-weights.bin  1-21-weights.bin  1-7-weights.bin  2-6-weights.bin  3-5-weights.bin
0-1-thres.bin    1-0-thres.bin    1-22-thres.bin    1-8-thres.bin    2-7-thres.bin    3-6-thres.bin
0-1-weights.bin  1-0-weights.bin  1-22-weights.bin  1-8-weights.bin  2-7-weights.bin  3-6-weights.bin
...
0-6-thres.bin    1-19-thres.bin   1-4-thres.bin     2-3-thres.bin    3-2-thres.bin    8-0-weights.bin
0-6-weights.bin  1-19-weights.bin 1-4-weights.bin   2-3-weights.bin  3-2-weights.bin  8-1-weights.bin
0-7-thres.bin    1-2-thres.bin    1-5-thres.bin     2-4-thres.bin    3-3-thres.bin    8-2-weights.bin
0-7-weights.bin  1-2-weights.bin  1-5-weights.bin   2-4-weights.bin  3-3-weights.bin  8-3-weights.bin
0-8-thres.bin    1-20-thres.bin   1-6-thres.bin     2-5-thres.bin    3-4-thres.bin    classes.txt
0-8-weights.bin  1-20-weights.bin 1-6-weights.bin   2-5-weights.bin  3-4-weights.bin  hw
```
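The generated directory holds `*-weights.bin` and `*-thres.bin` files plus `classes.txt` and `hw`. Judging only from the names, the first number looks like a layer index (CNV has 6 + 3 = 9 weight layers, which would fit indices 0 to 8, and the `8-*` files come without thresholds, plausibly the final layer) and the second like an index within that layer. That reading is an assumption, not documented here. A small sketch that groups the names that way, which can help check that a copy to the board is complete:

```python
import os
import re
import sys
from collections import defaultdict

# Assumed naming pattern: <first>-<second>-<kind>.bin, with kind = weights or thres
PATTERN = re.compile(r"^(\d+)-(\d+)-(weights|thres)\.bin$")

def group_param_files(names):
    """Count weights/thres files per first index; names not matching the pattern are ignored."""
    groups = defaultdict(lambda: {"weights": 0, "thres": 0})
    for name in names:
        m = PATTERN.match(name)
        if m:
            groups[int(m.group(1))][m.group(3)] += 1
    return dict(sorted(groups.items()))

if __name__ == "__main__":
    directory = sys.argv[1] if len(sys.argv) > 1 else "cnvW1A1"
    if os.path.isdir(directory):
        for first, counts in group_param_files(os.listdir(directory)).items():
            print(first, counts)
    else:
        print("directory not found:", directory)
```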

Run on Target Board (PYNQ-Z1)

```shell
## Copy weight files to /usr/local/lib/python3.6/dist-packages/bnn/params/original/cnvW1A1 on PYNQ
cd /usr/local/lib/python3.6/dist-packages/bnn/params/
mkdir -p original/cnvW1A1
```

Then run bnn/CNV-QNN_Cifar10.ipynb in Jupyter Notebook.

Training on Host Machine (LFC-BNN_MNIST)

Tips

- CPU runtime: 100 sec/epoch $\times$ 1000 epochs ≈ 28 hours

- GPU runtime: 2 sec/epoch $\times$ 1000 epochs ≈ 0.6 hours (Tesla T4)

Training

The original mnist.py was modified to avoid pylearn2 and to download the data with Keras instead:

bnn-pynq_mnist_mod.py.gz
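Since LFC takes binary (black/white) input, the MNIST pixels have to end up as two levels. As an illustration only (the attached script may do this differently), the sketch below thresholds Keras-style MNIST arrays (uint8, shape (N, 28, 28), as returned by `keras.datasets.mnist.load_data()`) to {-1, +1} and flattens each image to the 784 values a fully connected first layer consumes. The threshold of 128 and the helper name are assumptions.

```python
import numpy as np

def binarize_mnist(x, threshold=128):
    """uint8 images (N, 28, 28) -> float32 (N, 784) with values in {-1, +1}.

    Pixels >= threshold become +1 (white), the rest -1 (black).
    The threshold of 128 is an illustrative choice.
    """
    x = np.asarray(x)
    flat = x.reshape(x.shape[0], -1)
    return np.where(flat >= threshold, 1.0, -1.0).astype(np.float32)

if __name__ == "__main__":
    # Real data (requires Keras):
    #   from keras.datasets import mnist
    #   (x_train, y_train), (x_test, y_test) = mnist.load_data()
    rng = np.random.default_rng(0)
    x_fake = rng.integers(0, 256, size=(4, 28, 28)).astype(np.uint8)
    X = binarize_mnist(x_fake)
    print(X.shape, np.unique(X))
```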

```shell
python mnist_mod.py
#python mnist_mod.py -wb 1 -ab 2
```

```text
Using cuDNN version 7605 on context None
Mapped name None to device cuda: Tesla T4 (0000:00:04.0)
Using TensorFlow backend.
activation_bits = 1
weight_bits = 1
batch_size = 100
alpha = 0.1
epsilon = 0.0001
num_epochs = 1000
dropout_in = 0.2
dropout_hidden = 0.5
W_LR_scale = Glorot
LR_start = 0.003
LR_fin = 3e-07
LR_decay = 0.990831944893
save_path = mnist-1w-1a.npz
train_set_size = 50000
shuffle_parts = 1
Loading MNIST dataset...
...
Training...
Epoch 1 of 1000 took 2.09121918678s
LR: 0.003
training loss: 0.26854227716475726
validation loss: 0.044894146784208715
validation error rate: 6.429999990388752%
best epoch: 1
best validation error rate: 6.429999990388752%
test loss: 0.04777069476665929
test error rate: 6.899999972432852%
```

Spin-Off Projects

References

https://marsee101.web.fc2.com/pynq.html

https://www.tkat0.dev/study-BNN-PYNQ/

https://qiita.com/harmegiddo/items/6d7490214b693150cef9

https://qiita.com/ykshr/items/6c8cff881a200a781dc3

https://discuss.pynq.io/t/new-pynq-2-7-image-got-modulenotfounderror-no-module-named-pynq-when-install-bnn-pynq/3570/5

https://github.com/Xilinx/BNN-PYNQ/tree/master/bnn/src/training

https://qiita.com/ykshr/items/08147098516a45203761

https://gist.github.com/ykshr/a68403f5b073ba8f117faeb0a14385c1

https://qiita.com/kamotsuru/items/dcd460e04385f2738cd7

Acknowledgments

Daiphys is a professional services company in research and development of leading-edge technologies in science and engineering.

Get started accelerating your business through our deep expertise in R&D with AI, quantum computing, and space development; please get in touch with Daiphys today!

Daiphys Technologies LLC - https://www.daiphys.com/