
MMPretrain

Tutorials

Google Colaboratory - Image Classification with Pretrained ResNet18 on Version 1.2.0

https://colab.research.google.com/drive/1igoW8ryWYXDkcAExicaciFvHHFbscHDa

Google Colaboratory - Custom Dataset Training with ResNet18 on Version 1.2.0

https://colab.research.google.com/drive/1RkrPGyAcS0c6CH3eC07EKKwYrzm7ovlX

Custom Dataset

Getting Started

See also the MMDetection notes for further tips and pitfalls

Troubleshooting - GradCAM Compatibility

https://github.com/open-mmlab/mmyolo/issues/1013

python mmpretrain/tools/visualization/vis_cam.py ... ## TypeError: forward() got an unexpected keyword argument 'use_cuda'

grad-cam 1.5.2, the latest release as of 2024/07/11, is incompatible: its CAM classes no longer accept the 'use_cuda' argument that vis_cam.py passes

Installing version 1.3.6 as follows fixes this problem

pip install 'grad-cam==1.3.6'
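To check whether the installed grad-cam still accepts 'use_cuda' before running vis_cam.py, a quick signature check can help. accepts_kwarg() is a hypothetical helper, and the check assumes the package is importable as pytorch_grad_cam (the module installed by pip install grad-cam) and that the incompatibility shows up in the GradCAM constructor.

```python
import inspect


def accepts_kwarg(func, name):
    """Return True if `func` accepts a keyword argument called `name` (hypothetical helper)."""
    params = inspect.signature(func).parameters
    if name in params:
        return True
    # A **kwargs catch-all also accepts arbitrary keyword arguments
    return any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values())


try:
    # Assumption: grad-cam is imported as pytorch_grad_cam
    from pytorch_grad_cam import GradCAM
except ImportError:
    GradCAM = None

if GradCAM is not None:
    if accepts_kwarg(GradCAM.__init__, 'use_cuda'):
        print('use_cuda supported')
    else:
        print("use_cuda missing: pip install 'grad-cam==1.3.6'")
```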

Troubleshooting - CustomDataset KeyError

https://github.com/open-mmlab/mmpretrain/issues/101

python mmpretrain/tools/train.py ... ## KeyError: 'img'

dict(type='LoadImageFromFile') must appear at the start of the pipeline when the data are image files on disk rather than pickled arrays; without it, the later transforms find no 'img' key
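A minimal training-pipeline sketch for MMPretrain 1.x configs, assuming a CustomDataset in subfolder format. The data_root path, batch size and augmentation choices are illustrative assumptions; the point is that LoadImageFromFile comes first so that 'img' exists for the transforms after it.

```python
# Config fragment (MMPretrain 1.x style); paths and sizes are hypothetical
train_pipeline = [
    dict(type='LoadImageFromFile'),  # reads the file and creates results['img']
    dict(type='RandomResizedCrop', scale=224),
    dict(type='RandomFlip', prob=0.5, direction='horizontal'),
    dict(type='PackInputs'),  # packs 'img' and the label into model inputs
]

train_dataloader = dict(
    batch_size=32,
    num_workers=2,
    dataset=dict(
        type='CustomDataset',
        data_root='data/my_dataset',  # hypothetical location
        data_prefix='train',          # class subfolders live under train/
        pipeline=train_pipeline,
    ),
    sampler=dict(type='DefaultSampler', shuffle=True),
)
```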

Troubleshooting - Missing Task Definition on Inferencer

https://mmpretrain.readthedocs.io/en/stable/_modules/mmpretrain/apis/model.html#inference_model

from mmpretrain.apis import get_model, inference_model
model = get_model(config_file, pretrained=checkpoint_file, device=device)
result = inference_model(model, img)  ## No available inferencer for the model

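inference_model() chooses an inferencer from the task recorded in the model's metainfo (metainfo.results.result.tasks), so that field must be defined for the model; a model built from a local config file typically has no such metainfo and raises the error above

As an alternative, the task-specific inferencer can be instantiated directly, e.g. for image classification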

from mmpretrain.apis import ImageClassificationInferencer
inferencer = ImageClassificationInferencer(config_file, pretrained=checkpoint_file, device=device)
result = inferencer(img)
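The inferencer returns a list with one dict per input image. A small helper for reading it, assuming each dict carries 'pred_label' and 'pred_score', plus 'pred_class' when class names are known; top1_predictions() is a hypothetical name, not part of MMPretrain.

```python
def top1_predictions(results):
    """Collect (class name or label index, score) pairs from inferencer output.

    Assumes each result dict has 'pred_label' and 'pred_score', and
    'pred_class' only when the inferencer knows the class names.
    """
    pairs = []
    for res in results:
        label = res.get('pred_class', res['pred_label'])  # fall back to the index
        pairs.append((label, float(res['pred_score'])))
    return pairs
```

Usage: top1_predictions(inferencer(img)).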

Training with Custom Dataset

https://mmpretrain.readthedocs.io/en/latest/user_guides/dataset_prepare.html

https://mmpretrain.readthedocs.io/en/latest/user_guides/train.html

https://mmpretrain.readthedocs.io/en/latest/notes/pretrain_custom_dataset.html

https://mmpretrain.readthedocs.io/en/latest/notes/finetune_custom_dataset.html
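Before training on a CustomDataset in subfolder format (one folder per class under the split directory), a quick sanity check of the layout can save a failed run. This is a hypothetical helper, not MMPretrain code; the extension list is an assumption, and listing classes in sorted folder order is an assumption about how label indices are assigned, to be verified against the dataset_prepare guide.

```python
from pathlib import Path

# Assumed image extensions; adjust to match your data
IMG_EXTENSIONS = {'.jpg', '.jpeg', '.png', '.ppm', '.bmp', '.pgm', '.tif'}


def scan_subfolder_dataset(split_dir):
    """Count images per class in a <split_dir>/<class_name>/<image> tree."""
    counts = {}
    class_dirs = sorted(p for p in Path(split_dir).iterdir() if p.is_dir())
    for class_dir in class_dirs:
        counts[class_dir.name] = sum(
            1
            for f in class_dir.rglob('*')
            if f.is_file() and f.suffix.lower() in IMG_EXTENSIONS
        )
    return counts
```

Usage: scan_subfolder_dataset('data/my_dataset/train') returns something like {'cat': 120, 'dog': 98}; an empty class or an unexpected folder name shows up immediately.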

Information - Loss Functions

Information - Optimizers

https://pytorch.org/docs/stable/optim.html

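optimizer=dict(_delete_=True, ...) discards every key inherited from the base config for that dict, so the new settings replace the original ones instead of being merged into them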
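Why _delete_ matters can be seen with a simplified merge. merge_cfg() is a hypothetical sketch of the inheritance behaviour, not the actual mmengine implementation, and the base SGD values are illustrative. Without _delete_, switching to Adam keeps the inherited 'momentum', which torch.optim.Adam does not accept.

```python
import copy


def merge_cfg(base, override):
    """Simplified sketch of config inheritance: nested dicts merge key by key,
    unless the overriding dict sets _delete_=True, in which case it replaces
    the base dict entirely."""
    override = dict(override)  # shallow copy so pop() leaves the caller's dict intact
    if override.pop('_delete_', False):
        return copy.deepcopy(override)
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_cfg(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


base = dict(optim_wrapper=dict(
    optimizer=dict(type='SGD', lr=0.1, momentum=0.9, weight_decay=0.0001)))

# Plain override: SGD-only keys such as 'momentum' leak into the Adam config
merged = merge_cfg(base, dict(optim_wrapper=dict(
    optimizer=dict(type='Adam', lr=0.001))))

# _delete_=True: the optimizer dict is replaced, nothing is inherited
replaced = merge_cfg(base, dict(optim_wrapper=dict(
    optimizer=dict(_delete_=True, type='Adam', lr=0.001))))
```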

Information - Freeze Parameters

https://github.com/open-mmlab/mmpretrain/blob/main/mmpretrain/models/backbones/resnet.py

- frozen_stages = -1 : not freezing any parameters

- frozen_stages = 0 : freezing the stem part only

- frozen_stages = n [n >= 1] : freezing the stem part and first n stages
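The frozen_stages rule above can be sketched in plain Python. frozen_modules() and is_frozen() are hypothetical helpers, not MMPretrain APIs; the module names assume MMPretrain's ResNet with the default BN norm layer (stem = conv1 + bn1, or a single 'stem' block when deep_stem=True; stages = layer1 to layer4), and frozen_stages should not exceed the number of stages. The real backbone additionally sets requires_grad=False on these modules and keeps them in eval mode.

```python
def frozen_modules(frozen_stages, deep_stem=False):
    """Top-level backbone modules frozen for a given frozen_stages value (sketch)."""
    if frozen_stages < 0:
        return []  # -1: nothing is frozen
    stem = ['stem'] if deep_stem else ['conv1', 'bn1']
    # 0 freezes only the stem; n >= 1 also freezes layer1..layer{n}
    return stem + [f'layer{i}' for i in range(1, frozen_stages + 1)]


def is_frozen(param_name, frozen_stages, deep_stem=False):
    """Check a backbone-relative parameter name such as 'layer1.0.conv1.weight'."""
    top = param_name.split('.')[0]
    return top in frozen_modules(frozen_stages, deep_stem=deep_stem)
```

In a config this corresponds to, e.g., model = dict(backbone=dict(frozen_stages=2)), which would freeze conv1, bn1, layer1 and layer2.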

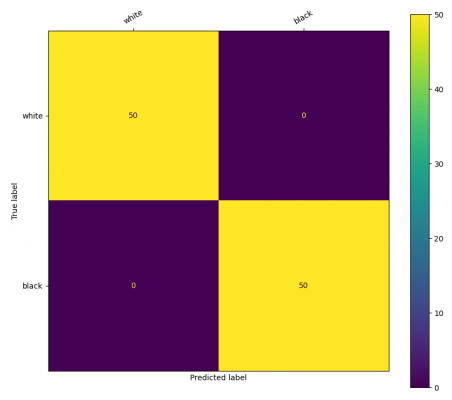

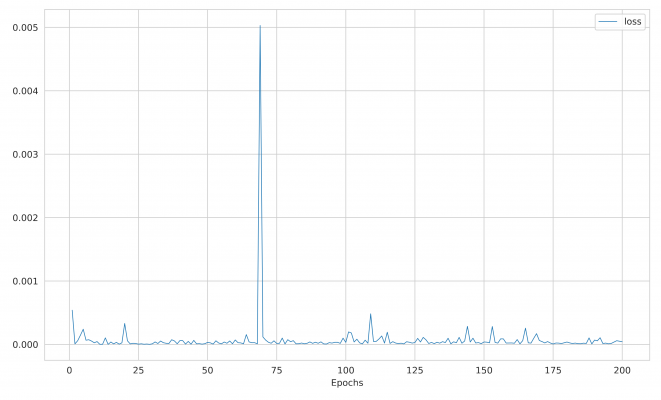

Results

ResNet18

ResNet18 - Custom Dataset Training