FINN

Getting Started

Publications

https://arxiv.org/abs/1612.07119

https://qiita.com/harmegiddo/items/beb344adddb749c2f1e1

https://www.ntnu.edu/documents/139931/1275097249/eecs-jun17-finn.pdf

System Requirements

https://finn.readthedocs.io/en/latest/getting_started.html#system-requirements

- System Memory: 8-16 GB

Difference of Topologies

- MNIST Interface (i.e., Gray(1)x28x28)

- SFC (Small Fully-Connected): Three-layer fully connected network with 256 neurons/layer (+ output-relevant layer)

- LFC (Large Fully-Connected): Three-layer fully connected network with 1,024 neurons/layer (+ output-relevant layer)

- TFC (Tiny Fully-Connected): Three-layer fully connected network with 64 neurons/layer (+ output-relevant layer)

- CIFAR-10 Interface (i.e., RGB(3)x32x32)

- CNV (Convolutional): Convolutional network inspired by BinaryNet and VGG-16
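As a rough size comparison of the fully connected topologies above, the following sketch counts the weights per network. It assumes 784 inputs (1x28x28), 10 output classes, and ignores biases and batch-norm parameters; the helper names are illustrative and not part of FINN.

```python
# Layer widths as described in the list above.
MNIST_INPUTS = 1 * 28 * 28   # Gray(1)x28x28
NUM_CLASSES = 10             # assumed width of the output layer

HIDDEN_WIDTH = {"TFC": 64, "SFC": 256, "LFC": 1024}


def layer_shapes(name, hidden_layers=3):
    """Return (inputs, outputs) for each fully connected layer of a topology."""
    width = HIDDEN_WIDTH[name]
    sizes = [MNIST_INPUTS] + [width] * hidden_layers + [NUM_CLASSES]
    return list(zip(sizes[:-1], sizes[1:]))


def weight_count(name):
    """Total number of weights, ignoring biases and batch-norm parameters."""
    return sum(n_in * n_out for n_in, n_out in layer_shapes(name))


for topology in ("TFC", "SFC", "LFC"):
    print(topology, layer_shapes(topology), weight_count(topology))
```

With these assumptions TFC has roughly 59 thousand weights, SFC about 334 thousand, and LFC about 2.9 million.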

Custom Network

Host Setup on Ubuntu

Prerequisites

sudo apt install zip

sudo apt install unzip

Installation

https://finn.readthedocs.io/en/latest/getting_started.html

git clone https://github.com/Xilinx/finn

export FINN_XILINX_PATH="/tools/Xilinx"

export FINN_XILINX_VERSION="2022.1"

## Required for Alveo

export PLATFORM_REPO_PATHS="/opt/xilinx/platforms"

## Environment Setup for Vitis

source /tools/Xilinx/Vitis/2022.1/settings64.sh

## Jupyter (as needed)

export JUPYTER_PORT="8888"

## Netron (as needed)

export LOCALHOST_URL="[IPADDR]"

export NETRON_PORT="8081"
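Before launching Docker it can help to confirm that the variables above are actually set. The sketch below is a minimal check under the assumption that Vitis lives at `$FINN_XILINX_PATH/Vitis/$FINN_XILINX_VERSION`, as in the `source` line above; `check_finn_env` is a hypothetical helper, not a FINN utility.

```python
import os

REQUIRED = ("FINN_XILINX_PATH", "FINN_XILINX_VERSION")
OPTIONAL = ("PLATFORM_REPO_PATHS", "JUPYTER_PORT", "LOCALHOST_URL", "NETRON_PORT")


def check_finn_env(env):
    """Return a list of human-readable problems found in the given environment mapping."""
    problems = [f"{name} is not set" for name in REQUIRED if not env.get(name)]
    root, version = env.get("FINN_XILINX_PATH"), env.get("FINN_XILINX_VERSION")
    if root and version:
        # Assumed layout: <root>/Vitis/<version>/settings64.sh
        settings = os.path.join(root, "Vitis", version, "settings64.sh")
        if not os.path.isfile(settings):
            problems.append(f"{settings} not found")
    return problems


if __name__ == "__main__":
    for problem in check_finn_env(os.environ):
        print("WARNING:", problem)
    for name in OPTIONAL:
        print(f"{name}={os.environ.get(name, '(unset)')}")
```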

Quick Test

cd ~/finn

./run-docker.sh quicktest

Running test suite (non-Vivado, non-slow tests)

Docker container is named finn_dev_user

Docker tag is named xilinx/finn:v0.9-2-gb3bdff11.xrt_202210.2.13.466_18.04-amd64-xrt

Mounting /tmp/finn_dev_user into /tmp/finn_dev_user

Mounting /opt/Xilinx into /opt/Xilinx

Port-forwarding for Jupyter 8888:8888

Port-forwarding for Netron 8081:8081

Vivado IP cache dir is at /tmp/finn_dev_user/vivado_ip_cache

Using default PYNQ board Pynq-Z1

Successfully checked out qonnx at commit dd35a8ff49d7225a07ffceeebe25a6361df48349

Successfully checked out finn-experimental at commit 9cbd2787b5160e2b44e0e8164a0df1457dbd5366

Successfully checked out brevitas at commit a5b71d6de1389d3e7db898fef72e014842670f03

Successfully checked out pyverilator at commit 766e457465f5c0dd315490d7b9cc5d74f9a76f4f

Successfully checked out cnpy at commit 4e8810b1a8637695171ed346ce68f6984e585ef4

Successfully checked out finn-hlslib at commit d27f6b6c5d8f1bb208db395659389603f63ad4be

Successfully checked out oh-my-xilinx at commit d1065a788219ca0eb54d5e57600b1f9d7f67d4cc

Successfully checked out avnet-bdf at commit 2d49cfc25766f07792c0b314489f21fe916b639b

Already up to date.

...

tests/brevitas/test_brevitas_mobilenet.py::test_brevitas_mobilenet

[gw0] [ 27%] XPASS tests/brevitas/test_brevitas_mobilenet.py::test_brevitas_mobilenet

...

tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-1-1]

[gw0] [ 74%] XFAIL tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-1-1]

tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-1-2]

[gw0] [ 74%] XFAIL tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-1-2]

tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-2-1]

[gw0] [ 74%] XFAIL tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-2-1]

tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-2-2]

[gw0] [ 74%] XFAIL tests/transformation/test_qonnx_to_finn.py::test_QONNX_to_FINN[mobilenet-2-2]

...

Docs: https://docs.pytest.org/en/stable/how-to/capture-warnings.html

1285 passed, 256 skipped, 4 xfailed, 1 xpassed, 73670 warnings in 385.58s (0:06:25)

Information - Pytest Results

https://zenn.dev/imaki/scraps/8e70706f595cdb

- PASSED

- FAILED

- SKIPPED

- XFAIL: Expected Failure

- XPASS: Unexpected Passing

- ERROR
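To check a quicktest run against the outcome types listed above, the final pytest summary line can be parsed into counts. This is a small sketch using the summary line from the quicktest log; `parse_summary` is an illustrative helper, and treating a run as clean when nothing failed or errored is an assumption that holds for pytest's default (non-strict) xfail handling.

```python
import re

SUMMARY = "1285 passed, 256 skipped, 4 xfailed, 1 xpassed, 73670 warnings in 385.58s (0:06:25)"


def parse_summary(line):
    """Map each outcome word in a pytest summary line to its count."""
    return {word: int(count) for count, word in re.findall(r"(\d+) ([a-z]+)", line)}


counts = parse_summary(SUMMARY)

# XFAIL, XPASS and SKIPPED do not count as failures here; only FAILED and ERROR do.
problems = sum(counts.get(key, 0) for key in ("failed", "error", "errors"))
print(counts, "clean" if problems == 0 else "needs attention")
```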

Troubleshooting - Attrs Dependency 01

https://stackoverflow.com/questions/63277123/what-does-the-error-message-about-pip-use-feature-2020-resolver-mean

https://note.com/npaka/n/n92580a746f2d

## ERROR: After October 2020 you may experience errors when installing or updating packages.

## This is because pip will change the way that it resolves dependency conflicts.

## We recommend you use --use-feature=2020-resolver to test your packages with the new resolver before it becomes the default.

cd ~/finn/docker

vi finn_entrypoint.sh

======================================================

pip install --user -e ${FINN_ROOT}/deps/qonnx --use-feature=2020-resolver

======================================================

Troubleshooting - Attrs Dependency 02

https://github.com/Xilinx/finn/releases/tag/v0.9

FINN v0.9 was released in early 2023 and was configured against dependency versions from around the end of 2022, so newer releases of some packages conflict with the pinned attrs version.

## ERROR: referencing 0.30.0 requires attrs>=22.2.0, but you'll have attrs 19.3.0 which is incompatible.

## jsonschema 4.18.4 requires attrs>=22.2.0, but you'll have attrs 19.3.0 which is incompatible.

cd ~/finn/deps/qonnx

## Add the following dependencies to install_requires

vi setup.cfg

======================================================

install_requires =

referencing==0.8.11

jsonschema==4.17.3

jsonschema-specifications==2022.12.3

jupyter-events==0.6.3

======================================================
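To see which versions actually ended up installed inside the container, a quick check along these lines may help. It is a sketch only: `as_tuple` handles plain dotted versions such as `19.3.0` and would need a real version parser for pre-release tags, and the helper names are hypothetical.

```python
from importlib import metadata

# Minimum attrs version demanded by the two packages in the error message above.
ATTRS_MINIMUM = "22.2.0"


def as_tuple(version):
    """Turn a plain dotted version such as '19.3.0' into (19, 3, 0)."""
    return tuple(int(part) for part in version.split("."))


def satisfies(installed, minimum):
    """True when the installed version is at least the required minimum."""
    return as_tuple(installed) >= as_tuple(minimum)


def installed_version(package):
    """Return the installed version string, or None when the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for package in ("attrs", "jsonschema", "referencing"):
    print(package, installed_version(package))

print("attrs 19.3.0 new enough?", satisfies("19.3.0", ATTRS_MINIMUM))
```

Pinning the older `referencing` and `jsonschema` releases, as done in setup.cfg above, avoids the requirement instead of upgrading attrs.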

Running FINN in Docker

cd ~/finn

## Run Docker Container (or with Jupyter Notebook)

#bash ./run-docker.sh

bash ./run-docker.sh notebook

Running Jupyter notebook server

Docker container is named finn_dev_user

Docker tag is named xilinx/finn:v0.9-2-gb3bdff11-dirty.xrt_202210.2.13.466_18.04-amd64-xrt

Mounting /tmp/finn_dev_user into /tmp/finn_dev_user

Mounting /tools/Xilinx into /tools/Xilinx

Port-forwarding for Jupyter 8888:8888

Port-forwarding for Netron 8081:8081

Vivado IP cache dir is at /tmp/finn_dev_user/vivado_ip_cache

Using default PYNQ board Pynq-Z1

...

Found Vitis at /tools/Xilinx/Vitis/2022.1

...

Found XRT at /opt/xilinx/xrt

Found Vitis HLS at /tools/Xilinx/Vitis_HLS/2022.1

## Run Jupyter Notebook in Docker with Port Forwarding (as needed)

#jupyter notebook --allow-root --no-browser --ip=0.0.0.0 --port=8888

[I 02:43:03.433 NotebookApp] Writing notebook server cookie secret to /tmp/...

...

To access the notebook, open this file in a browser:

file:///tmp/home_dir/.local/share/jupyter/runtime/nbserver-6-open.html

Or copy and paste one of these URLs:

http://finn_dev_user:8888/?token=abcdefg

or http://127.0.0.1:8888/?token=abcdefg

TFC Tutorial (TFCW1A1)

Compile Model on Jupyter

Run All on tfc_end2end_example.ipynb

- The ZynqBuild process is killed when only about 4 GB of system memory is available (e.g., n1-standard-1 on GCP)

- The ZynqBuild process takes about 45-60 minutes on a virtual machine with 2 vCPUs (e.g., n1-standard-2 on GCP)

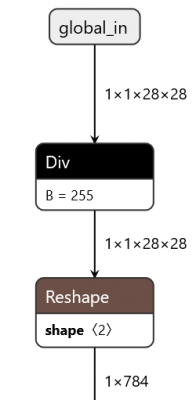

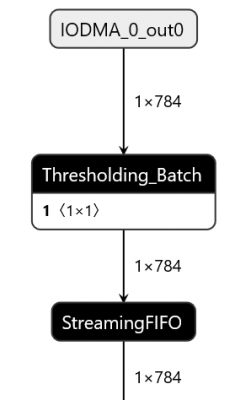

Original Network = image 1, Post Synthesis = image 2

Information - Loading Custom Model trained by Brevitas

Update the following part at the beginning of the Jupyter notebook as shown below:

## Comment out the line to disable getting the pretrained model from GitHub

#tfc = get_test_model_trained("TFC", 1, 1)

## Add the following lines to load a custom model trained with PyTorch/Brevitas

import torch

from brevitas_examples.bnn_pynq.models import model_with_cfg

model, cfg = model_with_cfg('TFC_1W1A', False)

model.load_state_dict(torch.load(build_dir+'/brevitas/checkpoints/best.tar')['state_dict'])

model.eval()

tfc = model

[IMPORTANT] Use brevitas.onnx.export_finn_onnx (not torch.onnx.export) to export the PyTorch/Brevitas model to ONNX for FINN.
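A minimal sketch of that export step is shown here, assuming a Brevitas version that still provides `export_finn_onnx` (as used by the FINN v0.9 notebooks) and the MNIST input shape (1, 1, 28, 28). The wrapper name `export_for_finn` and the output file name in the usage line are illustrative.

```python
def export_for_finn(model, onnx_path, input_shape=(1, 1, 28, 28)):
    """Export a Brevitas model with the FINN-specific exporter.

    Assumes brevitas.onnx.export_finn_onnx is available in the installed
    Brevitas version; input_shape is batch x channels x height x width.
    """
    # Imported lazily so this helper can be defined without Brevitas installed.
    import brevitas.onnx as bo

    model.eval()
    bo.export_finn_onnx(model, input_shape, onnx_path)
    return onnx_path
```

In the notebook this would be called as `export_for_finn(tfc, build_dir + "/tfc_w1_a1.onnx")` after the model has been loaded.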

Information - How to Customize Network

Update the following part, located before the ZynqBuild section of the Jupyter notebook, as shown below:

## Original TFC: 3 layers of 64 neurons (+ output)

config = [

(16, 49, [16], [64], "block"),

(8, 8, [64], [64], "auto"),

(8, 8, [64], [64], "auto"),

(10, 8, [64], [10], "distributed"),

]

## Update Network Example: 4 layers of 32 neurons (+ output)

config = [

(16, 49, [16], [32], "block"),

(8, 8, [32], [32], "auto"),

(8, 8, [32], [32], "auto"),

(8, 8, [32], [32], "auto"),

(10, 8, [32], [10], "distributed"),

]
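When editing the folding config it is easy to pick factors that do not fit the new layer sizes. The sketch below checks the updated config, assuming the tuple fields are (PE, SIMD, input FIFO depths, output FIFO depths, RAM style) as unpacked in the notebook's folding loop, that PE must divide the layer's output count and SIMD its input count, and that the layer shapes are 784 -> 32 -> 32 -> 32 -> 32 -> 10. `check_folding` is a hypothetical helper, not a FINN function.

```python
# (PE, SIMD, input FIFO depths, output FIFO depths, RAM style) -- assumed field order
config = [
    (16, 49, [16], [32], "block"),
    (8, 8, [32], [32], "auto"),
    (8, 8, [32], [32], "auto"),
    (8, 8, [32], [32], "auto"),
    (10, 8, [32], [10], "distributed"),
]

# (inputs, outputs) per layer for the 4 x 32-neuron example plus output layer
layer_shapes = [(784, 32), (32, 32), (32, 32), (32, 32), (32, 10)]


def check_folding(config, layer_shapes):
    """Return estimated cycles per layer; raise if a folding factor does not divide evenly."""
    if len(config) != len(layer_shapes):
        raise ValueError("one config entry is needed per layer")
    cycles = []
    for index, ((pe, simd, *_), (n_in, n_out)) in enumerate(zip(config, layer_shapes)):
        if n_out % pe or n_in % simd:
            raise ValueError(f"layer {index}: PE={pe}, SIMD={simd} do not fit {n_in}x{n_out}")
        # Rough per-image cycle estimate for a folded matrix-vector layer.
        cycles.append((n_in // simd) * (n_out // pe))
    return cycles


print(check_folding(config, layer_shapes))
```

The slowest layer in the returned list bounds the throughput of the dataflow pipeline, so balanced values are usually preferable.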

Troubleshooting - Null Set Property

## ERROR: [Common 17-55] 'set_property' expects at least one object.

## Resolution: If [get_<value>] was used to populate the object, check to make sure this command returns at least one valid object.

## ...

## Exception: CreateStitchedIP failed, no wrapper HDL found under StreamingDataflowPartition_0_wrapper.v

## Please check logs under the parent directory.

This error occurs when ZynqBuild is run at step 21 of this tutorial with an incompatible Vitis/Vivado version, here 2023.1 (as of July 2023).

Check that the Vitis/Vivado version matches the system requirements; the error does not occur with the compatible version 2022.1.

Troubleshooting - Process Killed

## /tools/Xilinx/Vivado/2022.1/bin/loader: line 312: 1033 Killed "$RDI_PROG" "$@"

The 'Killed' message indicates that the operating system terminated the process immediately with a SIGKILL signal.

The host machine needs more system memory; see OOM Killer for more details.

Another workaround is to add swap space; see Swap for instructions.
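To see how much RAM and swap the build host has before starting ZynqBuild, `/proc/meminfo` can be read directly on Linux. The sketch below is illustrative: the 8 GiB threshold is taken from the lower end of the system memory requirement, and counting RAM plus swap against it is an assumption, not a FINN rule.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer kB values."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def memory_report(text, minimum_gib=8):
    """Return (RAM GiB, swap GiB, whether RAM + swap reaches the minimum)."""
    info = parse_meminfo(text)
    ram_gib = info.get("MemTotal", 0) / 1024 ** 2
    swap_gib = info.get("SwapTotal", 0) / 1024 ** 2
    return ram_gib, swap_gib, ram_gib + swap_gib >= minimum_gib


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as handle:  # Linux only
            print(memory_report(handle.read()))
    except OSError:
        print("no /proc/meminfo on this system")
```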

PYNQ Deployment

## Copy and Unzip

unzip deploy-on-pynq-tfc.zip -d finn-tfc-demo

cd finn-tfc-demo

## Deployment on PYNQ Board

sudo python3 -m pip install bitstring

sudo python3 driver.py --exec_mode=execute --batchsize=1 --bitfile=resizer.bit --inputfile=input.npy

## Validating Accuracy: installing dataset_loading.git takes about 1-2 hours on PYNQ-Z1

sudo pip3 install git+https://github.com/fbcotter/dataset_loading.git@0.0.4#egg=dataset_loading

sudo python3 validate.py --dataset mnist --batchsize 1000

## Original TFC: 3 layers of 64 neurons (pretrained model via GitHub)

batch 1 / 10 : total OK 913 NOK 87

batch 2 / 10 : total OK 1800 NOK 200

batch 3 / 10 : total OK 2714 NOK 286

batch 4 / 10 : total OK 3619 NOK 381

batch 5 / 10 : total OK 4535 NOK 465

batch 6 / 10 : total OK 5488 NOK 512

batch 7 / 10 : total OK 6438 NOK 562

batch 8 / 10 : total OK 7399 NOK 601

batch 9 / 10 : total OK 8371 NOK 629

batch 10 / 10 : total OK 9296 NOK 704

Final accuracy: 92.960000

## Update Network Example: 4 layers of 32 neurons (training of only 1 epoch)

batch 1 / 10 : total OK 731 NOK 269

batch 2 / 10 : total OK 1432 NOK 568

batch 3 / 10 : total OK 2136 NOK 864

batch 4 / 10 : total OK 2856 NOK 1144

batch 5 / 10 : total OK 3539 NOK 1461

batch 6 / 10 : total OK 4326 NOK 1674

batch 7 / 10 : total OK 5101 NOK 1899

batch 8 / 10 : total OK 5878 NOK 2122

batch 9 / 10 : total OK 6705 NOK 2295

batch 10 / 10 : total OK 7461 NOK 2539

Final accuracy: 74.610000
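The OK/NOK totals printed by validate.py are cumulative, so the final accuracy follows from the last batch line alone. A short sketch of that arithmetic, using the last line of the original TFC run (the helper name is illustrative):

```python
import re

LAST_LINE = "batch 10 / 10 : total OK 9296 NOK 704"


def accuracy_from_line(line):
    """Return accuracy in percent from a cumulative 'total OK x NOK y' line."""
    match = re.search(r"total OK (\d+) NOK (\d+)", line)
    if match is None:
        raise ValueError("unrecognised validate.py output line")
    ok, nok = map(int, match.groups())
    return 100.0 * ok / (ok + nok)


print(f"{accuracy_from_line(LAST_LINE):.2f}")
```

The same calculation on the 4 x 32-neuron run (7461 OK, 2539 NOK) gives the reported 74.61.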

## Throughput Test

sudo python3 driver.py --exec_mode=throughput_test --batchsize=1000 --bitfile=resizer.bit

## Original TFC: 3 layers of 64 neurons

Results written to nw_metrics.txt

{

'runtime[ms]': 1.2881755828857422,

'throughput[images/s]': 776291.6898019619,

'DRAM_in_bandwidth[MB/s]': 608.612684804738,

'DRAM_out_bandwidth[MB/s]': 0.7762916898019618,

'fclk[mhz]': 100.0,

'batch_size': 1000,

'fold_input[ms]': 0.13446807861328125,

'pack_input[ms]': 0.09417533874511719,

'copy_input_data_to_device[ms]': 5.630970001220703,

'copy_output_data_from_device[ms]': 0.270843505859375,

'unpack_output[ms]': 0.9636878967285156,

'unfold_output[ms]': 0.17762184143066406

}

## Update Network Example: 4 layers of 32 neurons

Results written to nw_metrics.txt

{

'runtime[ms]': 1.2898445129394531,

'throughput[images/s]': 775287.2458410352,

'DRAM_in_bandwidth[MB/s]': 607.8252007393714,

'DRAM_out_bandwidth[MB/s]': 0.7752872458410351,

'fclk[mhz]': 100.0,

'batch_size': 1000,

'fold_input[ms]': 0.1392364501953125,

'pack_input[ms]': 0.09179115295410156,

'copy_input_data_to_device[ms]': 5.3615570068359375,

'copy_output_data_from_device[ms]': 0.2675056457519531,

'unpack_output[ms]': 1.0402202606201172,

'unfold_output[ms]': 0.1819133758544922

}
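The throughput and bandwidth figures above can be reproduced from the runtime and batch size. The sketch below recomputes them for the original TFC run; the per-image sizes (784 input bytes for 28x28 pixels, 1 output byte) are assumptions inferred from the reported numbers, and `derived_metrics` is an illustrative helper rather than part of the driver.

```python
def derived_metrics(runtime_ms, batch_size, in_bytes, out_bytes):
    """Recompute throughput and DRAM bandwidth from the measured batch runtime."""
    throughput = batch_size / (runtime_ms / 1000.0)  # images per second
    return {
        "throughput[images/s]": throughput,
        "DRAM_in_bandwidth[MB/s]": throughput * in_bytes / 1e6,
        "DRAM_out_bandwidth[MB/s]": throughput * out_bytes / 1e6,
    }


# Original TFC run: 28*28 one-byte pixels in, one byte out per image (assumed sizes).
for name, value in derived_metrics(1.2881755828857422, 1000, 28 * 28, 1).items():
    print(f"{name}: {value:.4f}")
```

Both networks land at about 776 thousand images/s, which suggests that at this batch size the measurement is dominated by something other than the network depth.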

Troubleshooting - No Module PYNQ as ROOT/SUDO

https://discuss.pynq.io/t/pynq-z2-on-pynq-release-3-0-1/4882

## Traceback (most recent call last):

## File "/home/xilinx/finn/finn-tfc-demo/driver.py", line 34, in <module>

## from driver_base import FINNExampleOverlay

## File "/home/xilinx/finn/finn-tfc-demo/driver_base.py", line 32, in <module>

## from pynq import Overlay, allocate

## ModuleNotFoundError: No module named 'pynq'

## Add the following lines to driver.py so that pynq can also be imported when running as root:

vi driver.py

======================================================

import sys

sys.path.append("/usr/local/share/pynq-venv/lib/python3.10/site-packages")

======================================================

## Another workaround:

sudo -s

source /usr/local/share/pynq-venv/bin/activate

#export XILINX_XRT="/usr"
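A slightly more defensive variant of the driver.py patch only extends the module search path when the pynq-venv directory actually exists and is not listed yet. This is a sketch; `add_site_packages` is a hypothetical helper, and the Python 3.10 path is the one from the traceback above and may differ on other PYNQ images.

```python
import os
import sys

PYNQ_SITE_PACKAGES = "/usr/local/share/pynq-venv/lib/python3.10/site-packages"


def add_site_packages(path, search_path=sys.path):
    """Append path to search_path if the directory exists and is not listed yet."""
    if os.path.isdir(path) and path not in search_path:
        search_path.append(path)
        return True
    return False


# Place this before the first `import pynq` (or `from driver_base import ...`).
added = add_site_packages(PYNQ_SITE_PACKAGES)
print("pynq-venv site-packages added:", added)
```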

Troubleshooting - No Device Found as ROOT/SUDO

https://discuss.pynq.io/t/pynq-z2-on-pynq-release-3-0-1/4882

https://discuss.pynq.io/t/error-no-device-found-when-loading-overlay/2989/6

## /usr/local/share/pynq-venv/lib/python3.10/site-packages/pynq/pl_server/device.py:56: UserWarning: No devices found, is the XRT environment sourced?

## warnings.warn(

## Traceback (most recent call last):

## File "/home/xilinx/finn/finn-tfc-demo/driver.py", line 78, in <module>

## accel = FINNExampleOverlay(

## File "/home/xilinx/finn/finn-tfc-demo/driver_base.py", line 80, in __init__

## super().__init__(bitfile_name, download=download, device=device)

## File "/usr/local/share/pynq-venv/lib/python3.10/site-packages/pynq/overlay.py", line 315, in __init__

## super().__init__(bitfile_name, dtbo, partial=False, device=device)

## File "/usr/local/share/pynq-venv/lib/python3.10/site-packages/pynq/bitstream.py", line 88, in __init__

## device = Device.active_device

## File "/usr/local/share/pynq-venv/lib/python3.10/site-packages/pynq/pl_server/device.py", line 71, in active_device

## raise RuntimeError("No Devices Found")

## RuntimeError: No Devices Found

## Add the following lines to driver.py so that pynq can find the device when running as root:

vi driver.py

======================================================

import os

os.environ["XILINX_XRT"] = "/usr"

======================================================

## Another workaround:

sudo -s

#source /usr/local/share/pynq-venv/bin/activate

export XILINX_XRT="/usr"
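If the variable may already be set in some environments, the driver.py patch can be written so that it only fills in a missing value. A minimal sketch, assuming the variable has to be in place before pynq is imported; `ensure_xrt_env` is an illustrative name.

```python
import os


def ensure_xrt_env(env=os.environ, default="/usr"):
    """Set XILINX_XRT only when it is missing, and return the effective value."""
    return env.setdefault("XILINX_XRT", default)


# Call this before `from pynq import Overlay` so an existing setting is respected.
print("XILINX_XRT =", ensure_xrt_env())
```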

CNV Tutorial (CNVW1A1)

Compile Model on Jupyter

Run All on cnv_end2end_example.ipynb

- The ZynqBuild process did not appear to finish on a virtual machine with 2 vCPUs (e.g., n1-standard-2 on GCP)

- The ZynqBuild process takes about 45-60 minutes on a virtual machine with 4 vCPUs (e.g., n1-standard-4 on GCP)

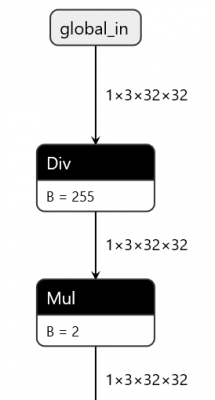

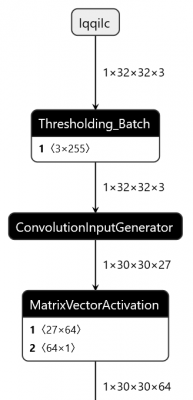

Original Network = image 3, Folded = image 4

PYNQ Deployment

## Copy and Unzip

unzip deploy-on-pynq-cnv.zip -d finn-cnv-demo

cd finn-cnv-demo

## Deployment on PYNQ Board

sudo python3 -m pip install bitstring

sudo python3 driver.py --exec_mode=execute --batchsize=1 --bitfile=resizer.bit --inputfile=input.npy

## Validating Accuracy

sudo pip3 install git+https://github.com/fbcotter/dataset_loading.git@0.0.4#egg=dataset_loading

sudo python3 validate.py --dataset cifar10 --batchsize 1000

batch 1 / 10 : total OK 851 NOK 149

batch 2 / 10 : total OK 1683 NOK 317

batch 3 / 10 : total OK 2522 NOK 478

batch 4 / 10 : total OK 3370 NOK 630

batch 5 / 10 : total OK 4207 NOK 793

batch 6 / 10 : total OK 5044 NOK 956

batch 7 / 10 : total OK 5887 NOK 1113

batch 8 / 10 : total OK 6728 NOK 1272

batch 9 / 10 : total OK 7570 NOK 1430

batch 10 / 10 : total OK 8419 NOK 1581

Final accuracy: 84.190000

## Throughput Test

sudo python3 driver.py --exec_mode=throughput_test --batchsize=1000 --bitfile=resizer.bit

Results written to nw_metrics.txt

{

'runtime[ms]': 500.66256523132324,

'throughput[images/s]': 1997.3532463685713,

'DRAM_in_bandwidth[MB/s]': 6.1358691728442505,

'DRAM_out_bandwidth[MB/s]': 0.0019973532463685713,

'fclk[mhz]': 100.0,

'batch_size': 1000,

'fold_input[ms]': 0.1494884490966797,

'pack_input[ms]': 0.09703636169433594,

'copy_input_data_to_device[ms]': 19.453763961791992,

'copy_output_data_from_device[ms]': 0.25844573974609375,

'unpack_output[ms]': 0.9322166442871094,

'unfold_output[ms]': 0.17309188842773438

}